Soccer Tracking - Part 1 - Object Detection and Tracking

For a while I’ve wanted to look at soccer statistics and data. Soccer has lagged behind other sports in statistics and data analysis because the obvious counting statistics are either uninteresting (e.g. the number of shots doesn’t tell you anything about the quality of those shots) or hard to track. Who wants to watch a game and count every pass played? Most of the interesting questions in soccer revolve around player positioning and how and where the ball moves around the field. Tracking data exists, and it is what’s behind things like pass maps and heat maps, but it is not freely available. A few small datasets have been released for research, but for the most part the data is controlled by a few companies who charge quite a bit for access.

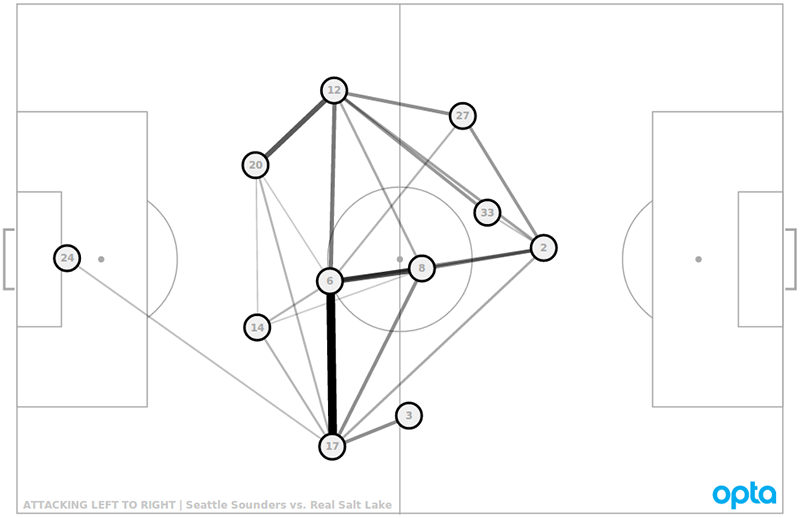

Simple pass map from https://statsbomb.com/articles/soccer/explaining-xgchain-passing-networks/

So why not write some software that can generate tracking data from a TV broadcast of a soccer game? Soccer broadcasts are easy to find for free, but they present a number of challenges: the camera is constantly panning and zooming, and it occasionally cuts away for a replay or an ad. The data won’t be perfect since we can’t know where every player is at all times, but it should be good enough to generate heat maps and other basic spatial analysis. The general idea is to take a video of a soccer game and, for each frame, determine which players are visible and record their positions on the field. If a player isn’t in the frame or can’t be detected for some other reason, we simply don’t record a position for that player in that frame.
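A minimal sketch of what that output could look like, assuming positions have already been mapped to field coordinates in metres on a 105 m by 68 m pitch (that mapping isn’t covered in this post). The record layout, the player ids and the `positions_for`/`heat_map` helpers are hypothetical. The point is that a missed detection is just a missing entry, and a heat map built only from the frames where a player *was* seen still works.

```python
from collections import defaultdict

# Hypothetical layout: frame index -> {player_id: (x, y)} in field coordinates
# (metres). A player who is off camera or undetected in a frame simply has no
# entry for that frame; nothing is interpolated.
tracking = {
    0: {"p7": (52.0, 30.5), "p10": (60.2, 41.0)},
    1: {"p7": (52.4, 30.9)},  # p10 not visible in frame 1
    2: {"p7": (53.1, 31.2), "p10": (61.0, 40.1)},
}


def positions_for(player_id, frames):
    """Return (frame, x, y) for every frame in which the player was recorded."""
    return [
        (frame, *players[player_id])
        for frame, players in sorted(frames.items())
        if player_id in players
    ]


def heat_map(player_id, frames, pitch=(105.0, 68.0), cells=(3, 2)):
    """Count recorded positions per grid cell; missing frames add nothing."""
    counts = defaultdict(int)
    cell_w, cell_h = pitch[0] / cells[0], pitch[1] / cells[1]
    for _, x, y in positions_for(player_id, frames):
        col = min(int(x // cell_w), cells[0] - 1)
        row = min(int(y // cell_h), cells[1] - 1)
        counts[(col, row)] += 1
    return dict(counts)
```

Player `p10` contributes two observations instead of three, and nothing else about the analysis has to change.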

So let’s start looking at object tracking. Object tracking is the computer vision term for detecting an object and following it through a video and/or across multiple video streams from different cameras. It is closely related to object detection, the problem of identifying specific objects in an image, but detection only looks at a single frame: it doesn’t decide whether two detections in consecutive frames are the same object, and it doesn’t follow an object’s position over time. Tracking builds on detection, using the detector’s output for each frame as the input to the tracking algorithm.
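To make the distinction concrete, here is a minimal sketch of tracking-by-detection, assuming the detector hands us a list of `(x1, y1, x2, y2)` boxes per frame with no ids. The hypothetical `GreedyIoUTracker` links each box to the previous frame’s box it overlaps most (intersection over union, IoU) and gives it a new id otherwise. Real trackers such as ByteTrack add motion prediction, optimal rather than greedy matching, and memory for lost tracks.

```python
from itertools import count


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


class GreedyIoUTracker:
    """Toy tracker: links each frame's detections to last frame's tracks."""

    def __init__(self, iou_threshold=0.3):
        self.iou_threshold = iou_threshold
        self.tracks = {}  # track id -> box seen in the previous frame
        self._ids = count(1)

    def update(self, detections):
        """detections: boxes for one frame, no ids. Returns {id: box}."""
        # Every (track, detection) pair, best overlap first.
        candidates = sorted(
            ((iou(box, det), tid, d)
             for tid, box in self.tracks.items()
             for d, det in enumerate(detections)),
            reverse=True,
        )
        assigned, used_tracks, used_dets = {}, set(), set()
        for score, tid, d in candidates:
            if score < self.iou_threshold:
                break
            if tid in used_tracks or d in used_dets:
                continue
            assigned[tid] = detections[d]
            used_tracks.add(tid)
            used_dets.add(d)
        # Any detection left over starts a new track with a fresh id.
        for d, det in enumerate(detections):
            if d not in used_dets:
                assigned[next(self._ids)] = det
        self.tracks = assigned  # unmatched tracks are simply dropped here
        return assigned
```

Feeding it two frames where the same two players move slightly and the detector lists them in a different order returns the same id for each player in both frames, which is exactly the information detection alone doesn’t provide.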

Example of the ByteTrack object tracking algorithm from https://github.com/ifzhang/ByteTrack

Since object tracking relies so heavily on object detection, we first need a model that can detect soccer players. There are a number of easy-to-use object detection models, and for the first attempt at tracking soccer players I decided to use YOLOv8. YOLOv8 comes with a number of models pretrained on the COCO dataset, and it also provides an easy way to train a custom model on your own data.

Output of running YOLOv8 object detection on a soccer game

The pretrained YOLOv8 model does well at detecting the players and the ball. It also detects the referees and some fans, and labels them all as “person” along with the players. One downside of pretrained models is that they only know the labels from the dataset they were trained on: those labels may not make the distinctions we care about (player vs. referee), and many of them aren’t relevant to a specific use case. In this example you can see some very brief erroneous detections of “tennis racket” and “clock”, two things we know aren’t on a soccer pitch. To create a custom model, I sampled a few hundred frames from a couple of soccer games and used Roboflow to label them. Now we can train a YOLO model on this dataset, and the model will only know about the classes we’ve taught it (player, ball, referee and manager). Hopefully it will also learn that we only care about objects on the pitch.
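Before a custom model is available, one stopgap is to throw away every pretrained class except the ones we care about. The sketch below assumes detections arrive as `(class_id, confidence, box)` tuples; the class indices are the standard 80-class COCO ones used by the pretrained YOLOv8 models (0 is person, 32 sports ball, 38 tennis racket, 74 clock), and the 0.25 confidence cut-off is an arbitrary choice. Ultralytics’ predict call also accepts a `classes` argument that does the class filtering for you.

```python
# Standard 80-class COCO indices (as used by the pretrained YOLOv8 models).
COCO_NAMES = {0: "person", 32: "sports ball", 38: "tennis racket", 74: "clock"}
RELEVANT_CLASSES = {0, 32}  # person, sports ball


def keep_relevant(detections, min_conf=0.25, relevant=RELEVANT_CLASSES):
    """Drop detections of irrelevant classes or below a confidence threshold.

    detections: iterable of (class_id, confidence, (x1, y1, x2, y2)).
    """
    return [
        (class_id, conf, box)
        for class_id, conf, box in detections
        if class_id in relevant and conf >= min_conf
    ]


def describe(detections):
    """Human-readable class names, handy for eyeballing what survived."""
    return [COCO_NAMES.get(class_id, str(class_id)) for class_id, _, _ in detections]
```

This removes the tennis rackets and clocks, but it can’t separate players from referees or fans, since COCO labels all of them “person”. That is the gap the custom classes fill.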

Object detection using a custom model trained specifically for soccer

The custom model performs much better than the pretrained model. It distinguishes between players and referees, doesn’t detect any fans, and doesn’t detect any tennis rackets or clocks since it doesn’t even know what those objects are. Ball detection isn’t quite as good as with the pretrained model, and sometimes a single player is detected as two. Some noise is inevitable and will be dealt with in postprocessing, but this is pretty good for a first stab at a custom model trained on only a few hundred images. The model should improve with more training data, and we can use this version of it to bootstrap future data labelling.
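One possible postprocessing step for the “one player detected as two” problem, offered as a sketch rather than what I actually do. YOLO already applies non-maximum suppression based on IoU, but IoU can be low when one box sits mostly inside another (say, a box covering just a player’s legs inside a box covering the whole player). Measuring the overlap against the smaller box’s area catches that case. The `(label, confidence, box)` format, the helper names and the 0.8 threshold are assumptions.

```python
def overlap_ratio(a, b):
    """Intersection area divided by the area of the smaller (x1, y1, x2, y2) box."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    smaller = min(area_a, area_b)
    return ix * iy / smaller if smaller > 0 else 0.0


def merge_duplicates(detections, threshold=0.8):
    """Drop a box that mostly sits inside a higher-confidence box of the same label.

    detections: list of (label, confidence, (x1, y1, x2, y2)).
    """
    kept = []
    for det in sorted(detections, key=lambda d: d[1], reverse=True):
        label, _, box = det
        is_duplicate = any(
            k_label == label and overlap_ratio(k_box, box) >= threshold
            for k_label, _, k_box in kept
        )
        if not is_duplicate:
            kept.append(det)
    return kept
```

The catch is that two real players who overlap on screen (one partly hidden behind the other) can look exactly like this, so the threshold is a trade-off and the rule is probably better used as a hint during postprocessing than as a hard filter.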

Now that we have a solid object detection model, we need to add object tracking. It might look like the model is already tracking objects, but it is actually just running detection independently on each frame of the video. With a tracking algorithm, each object (player, referee, ball) is assigned an id, and the algorithm decides which detection in each subsequent frame belongs to that same id. YOLO implements versions of ByteTrack and BoT-SORT out of the box, but I ended up writing my own implementation of both since the YOLO versions don’t implement re-identification or multi-class tracking.
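Multi-class tracking mostly comes down to one rule: a track can only be matched to a detection of the same class, so the ball can never inherit a player’s id just because it rolled past their feet. A toy sketch of that rule, assuming detections arrive as `(label, box)` pairs and matching greedily on the distance between box centres; `MultiClassTracker` and the 50-pixel gate are hypothetical, and there is no re-identification here.

```python
import math
from itertools import count


def center(box):
    """Centre point of an (x1, y1, x2, y2) box."""
    return ((box[0] + box[2]) / 2, (box[1] + box[3]) / 2)


class MultiClassTracker:
    """Toy tracker that only matches detections to tracks of the same class."""

    def __init__(self, max_distance=50.0):
        self.max_distance = max_distance
        self.tracks = {}  # id -> (label, box)
        self._ids = count(1)  # ids are unique across all classes

    def update(self, detections):
        """detections: list of (label, box). Returns {id: (label, box)}."""
        pairs = []
        for tid, (t_label, t_box) in self.tracks.items():
            for d, (label, box) in enumerate(detections):
                if label != t_label:
                    continue  # the class gate: never pair across classes
                dist = math.dist(center(t_box), center(box))
                if dist <= self.max_distance:
                    pairs.append((dist, tid, d))
        pairs.sort()  # closest pairs first
        new_tracks, used_t, used_d = {}, set(), set()
        for _, tid, d in pairs:
            if tid in used_t or d in used_d:
                continue
            new_tracks[tid] = detections[d]
            used_t.add(tid)
            used_d.add(d)
        # Unmatched detections start new tracks.
        for d, det in enumerate(detections):
            if d not in used_d:
                new_tracks[next(self._ids)] = det
        self.tracks = new_tracks
        return new_tracks
```

If you drop the label check, a ball that ends up where a player was one frame earlier would be matched to that player’s track, and the player’s own detection would be handed a new id.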

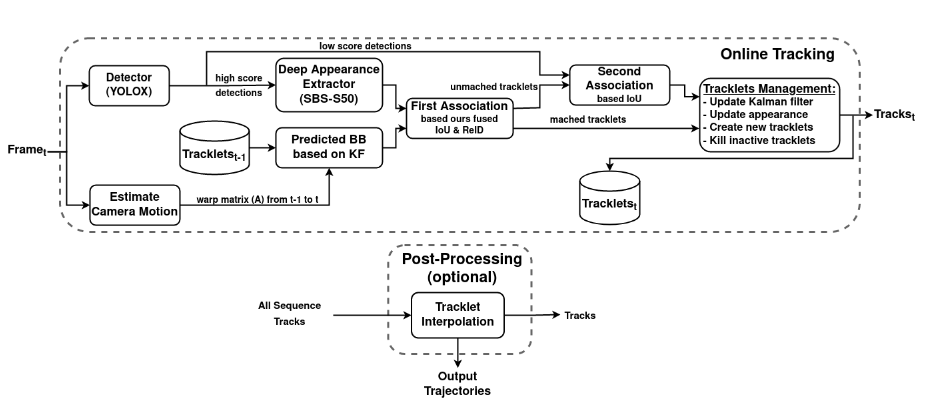

BoT-SORT-ReID pipeline from https://arxiv.org/abs/2206.14651

I will probably go into more detail on BoT-SORT and re-identification in a future post, but the image above shows all of the steps in the algorithm. At a high level, BoT-SORT works as follows:

1. Run object detection

2. Correct for camera motion

3. Predict where tracked objects should be based on their position in the previous frame

4. Associate detections in this frame with existing tracks using the predictions from step 3

5. Store objects and tracks so we can continue tracking them in the next frame
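A toy version of that loop, to make the five steps concrete. It is not BoT-SORT: it assumes camera motion is a pure pixel translation supplied by the caller (BoT-SORT estimates the camera motion from the video frames), uses a constant-velocity guess instead of a Kalman filter, and matches greedily on IoU with no re-identification. `ToyTracker`, `Track`, `camera_shift` and the default thresholds are hypothetical.

```python
from dataclasses import dataclass
from itertools import count


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def shift(box, dx, dy):
    return (box[0] + dx, box[1] + dy, box[2] + dx, box[3] + dy)


@dataclass
class Track:
    tid: int
    box: tuple                    # (x1, y1, x2, y2) in image pixels
    velocity: tuple = (0.0, 0.0)  # pixels per frame, excluding camera motion
    lost_for: int = 0             # consecutive frames without a match


class ToyTracker:
    def __init__(self, iou_threshold=0.3, max_lost=30):
        self.iou_threshold = iou_threshold
        self.max_lost = max_lost
        self.tracks = []
        self._ids = count(1)

    def step(self, detections, camera_shift=(0.0, 0.0)):
        """detections: boxes from step 1. camera_shift: how far the static
        background moved in the image since the last frame (assumed given)."""
        # 2. Correct for camera motion (here: a pure translation).
        for t in self.tracks:
            t.box = shift(t.box, *camera_shift)
        # 3. Predict each track's box with a constant-velocity guess.
        predicted = [shift(t.box, *t.velocity) for t in self.tracks]
        # 4. Associate greedily, best IoU with the predicted box first.
        pairs = sorted(
            ((iou(pred, det), i, d)
             for i, pred in enumerate(predicted)
             for d, det in enumerate(detections)),
            reverse=True,
        )
        used_t, used_d = set(), set()
        for score, i, d in pairs:
            if score < self.iou_threshold:
                break
            if i in used_t or d in used_d:
                continue
            t, new_box = self.tracks[i], detections[d]
            t.velocity = (new_box[0] - t.box[0], new_box[1] - t.box[1])
            t.box, t.lost_for = new_box, 0
            used_t.add(i)
            used_d.add(d)
        # 5. Store: unmatched tracks are marked lost and coast on their
        #    prediction; they are only deleted after max_lost missed frames.
        for i, t in enumerate(self.tracks):
            if i not in used_t:
                t.box = predicted[i]
                t.lost_for += 1
        self.tracks = [t for t in self.tracks if t.lost_for <= self.max_lost]
        for d, det in enumerate(detections):
            if d not in used_d:
                self.tracks.append(Track(next(self._ids), det))
        return {t.tid: t.box for t in self.tracks if t.lost_for == 0}
```

A track that misses a frame isn’t thrown away: it keeps moving along its last velocity and can pick up its old id again when a detection appears where it is expected.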

BoT-SORT uses a Kalman filter to predict the position of objects between frames. If we can’t associate any detection in the current frame with a track we are following, the track is marked as lost but still stored, and it can become active again in a later frame if a matching detection turns up. This helps with objects that are occluded by other objects or that leave the picture because of camera motion or other reasons. Re-identification also helps with this issue: it uses features of the image itself to match a detection back to a track. The Kalman filter lets the algorithm match a detection to previous detections based on position and movement, while re-identification matches based on whether the detection looks like previous detections.
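To make the prediction step concrete, here is a minimal constant-velocity Kalman filter for a single coordinate. BoT-SORT’s filter tracks the whole box (position, size and their velocities) in one state vector; this sketch keeps just one scalar position and velocity and uses made-up noise values `q`, `r` and `v_var`, so treat it as an illustration of the predict/update cycle rather than BoT-SORT’s filter.

```python
class ConstantVelocityKF:
    """1-D constant-velocity Kalman filter: state is (position, velocity).

    A simplification: run one per coordinate (e.g. box centre x and y)
    instead of BoT-SORT's single filter over the whole box.
    """

    def __init__(self, position, q=1.0, r=4.0, v_var=100.0):
        self.p = float(position)  # position estimate (pixels)
        self.v = 0.0              # velocity estimate (pixels per frame)
        # Covariance [[var_p, cov_pv], [cov_pv, var_v]]; velocity starts very uncertain.
        self.P = [[r, 0.0], [0.0, v_var]]
        self.q = q  # process noise added to each diagonal term (assumed)
        self.r = r  # measurement noise variance (assumed)

    def predict(self, dt=1.0):
        """Move the state forward: P <- F P F^T + Q with F = [[1, dt], [0, 1]]."""
        (a, b), (_, d) = self.P
        self.p += dt * self.v
        self.P = [
            [a + 2 * dt * b + dt * dt * d + self.q, b + dt * d],
            [b + dt * d, d + self.q],
        ]
        return self.p

    def update(self, z):
        """Correct the state with a measured position z (H = [1, 0])."""
        (a, b), (_, d) = self.P
        s = a + self.r           # innovation variance
        k_p, k_v = a / s, b / s  # Kalman gain for position and velocity
        innovation = z - self.p
        self.p += k_p * innovation
        self.v += k_v * innovation
        # P <- (I - K H) P
        self.P = [
            [(1 - k_p) * a, (1 - k_p) * b],
            [b - k_v * a, d - k_v * b],
        ]
        return self.p
```

While a track is lost, you keep calling `predict()` without `update()`: the estimate coasts along at the last known velocity while its variance grows, which is what lets a reappearing player be matched near where the filter expects them.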


Object tracking using BoT-SORT

After running BoT-SORT on one of the videos, we can see that an id is assigned to each player and the algorithm tracks players well. Ids stay the same throughout the video unless a player goes off camera for an extended period of time. One advantage we have when tracking soccer players rather than the pedestrians in the ByteTrack example clip is that soccer players come with additional constraints. There can only ever be one ball, at most twenty-two players, and three referees. We also know that players play in specific positions and stay in similar areas of the field throughout the game. Since some reconciling of ids is inevitable when camera movement causes players to drop out of view, I don’t think it’s worth trying to improve the tracking much more. I do think that with postprocessing we should be able to determine which tracking ids should be grouped together to identify a single player. That is definitely one of the bigger challenges still to solve, along with mapping the bounding boxes to field x/y coordinates.
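The count constraints can be applied per frame as a simple filter. A minimal sketch, assuming `(label, confidence, box)` detections and caps taken from the paragraph above (one ball, twenty-two players, three referees), keeping the most confident detections of each capped class. `apply_caps` and `MAX_PER_FRAME` are hypothetical names, and this does nothing about grouping tracking ids, which is the harder problem.

```python
# Per-frame caps from the constraints above; labels without a cap pass through.
MAX_PER_FRAME = {"ball": 1, "player": 22, "referee": 3}


def apply_caps(detections, caps=MAX_PER_FRAME):
    """Keep at most caps[label] highest-confidence detections per label.

    detections: list of (label, confidence, (x1, y1, x2, y2)).
    """
    kept, seen = [], {}
    for det in sorted(detections, key=lambda d: d[1], reverse=True):
        label = det[0]
        limit = caps.get(label)
        if limit is not None and seen.get(label, 0) >= limit:
            continue  # this class is already at its cap for the frame
        seen[label] = seen.get(label, 0) + 1
        kept.append(det)
    return kept
```

Keeping only the most confident ball is a blunt rule: if a false positive elsewhere in the frame outscores the real ball, the real one is the detection that gets dropped.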